Before we try to figure out what the future of AI might look like, it’s helpful to take a look at what AI can already do.

This memo presents a selection of the most impressive results from current state-of-the-art AI systems, to give you a sense of what AI can do today.

ML systems today can only perform a very small portion of tasks that humans can do, and (with a few exceptions) only within narrow specialties (like playing one particular game or generating one particular kind of image).

That said, since deep learning came into increasingly widespread use in the mid-2010s, there has been huge progress in what can be achieved with ML.

PaLM (Pathways Language Model) is a 540 billion parameter language model produced by Google Research. It was trained on a dataset of billions of sentences that represent a wide range of natural language use cases. The dataset is a mixture of filtered webpages, books, Wikipedia, news articles, source code, and social media conversations.

PaLM demonstrates impressive natural language understanding and generation capabilities. For example, the model can distinguish cause and effect, infer conceptual combinations in appropriate contexts, and even guess a movie from an emoji.

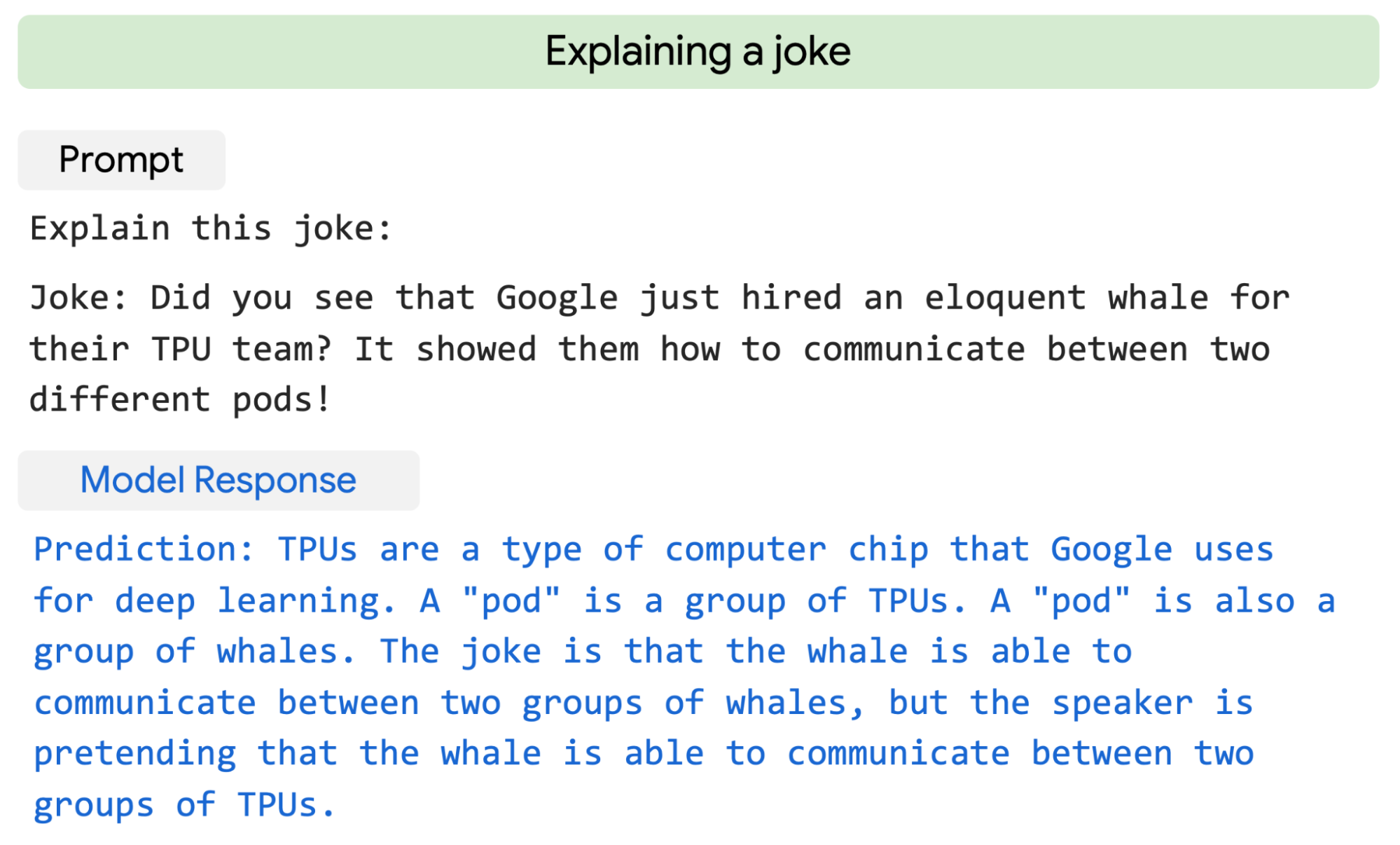

PaLM can even generate explicit explanations for scenarios that require a complex combination of multi-step logical inference, world knowledge, and deep language understanding. For example, it can provide high-quality explanations for novel jokes not found on the web.

Google tested several sizes of PaLM. As the scale of the model increased, performance improved across tasks and new capabilities emerged (such as the ability to output working computer code, do common-sense reasoning, and make logical inferences).

PaLM 540B (the largest model) generalises its ability to understand language well enough to complete coding tasks, such as writing code given a description (text-to-code), translating code from one language to another, and fixing compilation errors (code-to-code). PaLM shows strong performance across coding tasks even though code represented only 5% of the data in its training dataset.

Minerva is a model built on the Pathways Language Model (PaLM), with further training on a 118 GB dataset of scientific papers and web pages that contain mathematical expressions.

It was built to solve mathematical and scientific questions like the following:

The input to Minerva is the question, exactly as you see here. It’s worth recalling that Minerva is designed only to predict language and has no access to a calculator or any other external tools.

Given the above input, here is what Minerva outputs (correctly):